Cleft Closure 2.0

A Learning and Planning App for Future Cleft Surgeons

Cranio-maxillofacial (CMF) surgeons who want to specialize in cleft surgery, as well as the interdisciplinary surgical team, should be able to take part in the operation planning for patients with cleft lip and palate (CLP). Various web and smartphone applications already summarize knowledge of CLP surgery, but they do not take current patient cases into account. An interactive application should give trainees in particular the opportunity to revise their knowledge in a virtual 3D space and to practice the planned surgical intervention on current cases. The focus is on easy-to-use technology that facilitates interdisciplinary discussion. Trainees benefit especially from the experience of professionals, such as the cooperation partner Prof. Dr. med. Dr. dent. Katja Schwenzer-Zimmerer from the University Hospital Graz.

The aim of the present work is to develop a tool that integrates current patient data into the application, so that users can form an impression of the result. The 3D model will include the categories of cleft characteristics defined by the medical classifications ICD-10 and “LAHSHAL”, and should thus provide a schema for each individual case. The design of the model and the application should provide an interface between surgical diagnostic procedures and medical training media, and thereby make it possible to transfer learned knowledge to the current case.
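A minimal sketch of how a LAHSHAL code could be turned into a per-case schema for the model. It assumes a seven-character code read from the patient's right side to the left side, with uppercase letters for a complete cleft, lowercase letters for an incomplete cleft and `.` for an unaffected structure. These conventions, the position labels and the names `parse_lahshal` and `CleftFinding` are assumptions for illustration and should be checked against the notation actually used in the clinic.

```python
from dataclasses import dataclass

# Hypothetical labels for the seven LAHSHAL positions.
# Assumption: the code is read from the patient's right side to the left side.
POSITIONS = (
    "right lip", "right alveolus", "right hard palate",
    "soft palate",
    "left hard palate", "left alveolus", "left lip",
)
EXPECTED = "LAHSHAL"


@dataclass(frozen=True)
class CleftFinding:
    position: str
    severity: str  # "complete", "incomplete" or "none"


def parse_lahshal(code: str) -> list[CleftFinding]:
    """Parse a seven-character LAHSHAL code into per-position findings.

    Assumed notation: uppercase = complete cleft, lowercase = incomplete
    cleft, '.' = structure not affected.
    """
    if len(code) != len(EXPECTED):
        raise ValueError(f"expected 7 characters, got {len(code)}")
    findings = []
    for char, expected, position in zip(code, EXPECTED, POSITIONS):
        if char == ".":
            severity = "none"
        elif char == expected:
            severity = "complete"
        elif char == expected.lower():
            severity = "incomplete"
        else:
            raise ValueError(f"unexpected character {char!r} at {position}")
        findings.append(CleftFinding(position, severity))
    return findings
```

For example, `parse_lahshal("LAH....")` would mark the right lip, right alveolus and right hard palate as completely cleft and all other structures as unaffected. Each finding could then select which parts of the 3D model are shown as cleft.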

Points of Orientation

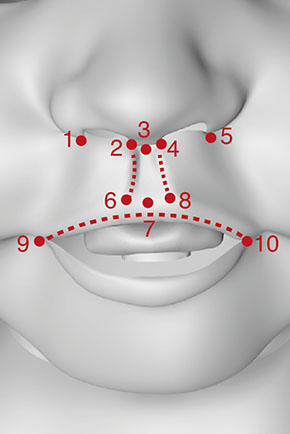

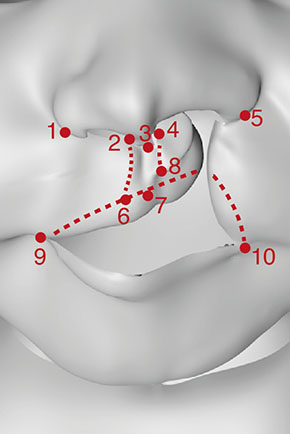

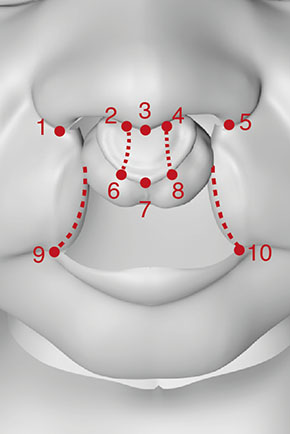

In cranio-maxillofacial surgery, doctors characterize a cleft lip by the positions of its landmarks relative to their positions in a normal baby face.

Here you can see two kinds of cleft lip (unilateral and bilateral) and, for comparison, the normal positions of the landmarks.

Many CMF surgeons use the landmarks to plan the surgical strategy by translating them into a pattern.
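To compare a patient with the normal baby face, each landmark's deviation from its reference position can be computed. A minimal sketch, assuming both sets of landmarks are already given as 3D coordinates in millimetres in a common, aligned coordinate frame; the landmark names and the function `landmark_deviations` are hypothetical, and the numbers in the usage example are placeholders, not patient data.

```python
import math

Vec3 = tuple[float, float, float]


def landmark_deviations(
    patient: dict[str, Vec3], reference: dict[str, Vec3]
) -> dict[str, tuple[float, float, float, float]]:
    """Return (dx, dy, dz, distance) of each patient landmark from its normal position."""
    deviations = {}
    for name, ref in reference.items():
        if name not in patient:
            continue  # landmark was not captured for this patient
        pos = patient[name]
        dx, dy, dz = (p - r for p, r in zip(pos, ref))
        deviations[name] = (dx, dy, dz, math.dist(pos, ref))
    return deviations


# Usage with placeholder coordinates (mm):
reference = {"cupids_bow_peak_right": (5.0, 0.0, 0.0)}
patient = {"cupids_bow_peak_right": (8.0, 4.0, 0.0)}
print(landmark_deviations(patient, reference))
```

The displacement vectors, rather than the absolute positions, are what describe the individual cleft, so they are a natural input for adapting the model.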

How to integrate the data into the app?

The most difficult question for my project is how to integrate the patient's data into an existing animation. I have read a lot about motion capture, and I was thinking of inverting this system: instead of animating certain points according to a puppeteer, the points would change the appearance of an animated model. Maybe several points integrated into a prepared mesh can affect the surrounding areas and thereby modify the model's appearance.
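One way to realize this inverted idea is to treat the landmarks as handles that pull on the surrounding mesh: a landmark's displacement from its normal position is spread to nearby vertices with a weight that decreases with distance. The sketch uses a simple Gaussian falloff; this is a swapped-in technique chosen for brevity (skeletal skinning or radial basis function interpolation would be alternatives), and `deform_mesh` and the `radius` parameter are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]


def deform_mesh(
    vertices: list[Vec3], handles: list[tuple[Vec3, Vec3]], radius: float
) -> list[Vec3]:
    """Move mesh vertices according to displaced landmark handles.

    Each handle is (rest_position, displacement). A vertex receives the sum of
    all handle displacements, each scaled by a Gaussian weight that falls off
    with the vertex's distance from the handle's rest position.
    """
    deformed = []
    for v in vertices:
        offset = [0.0, 0.0, 0.0]
        for rest, disp in handles:
            w = math.exp(-((math.dist(v, rest) / radius) ** 2))
            for i in range(3):
                offset[i] += w * disp[i]
        deformed.append((v[0] + offset[0], v[1] + offset[1], v[2] + offset[2]))
    return deformed
```

Because the weights of several handles add up, a vertex that sits exactly on one landmark can still be shifted by neighbouring handles. Radial basis function interpolation would make each landmark land exactly on its target, at the cost of solving a small linear system.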

How to modify the model?

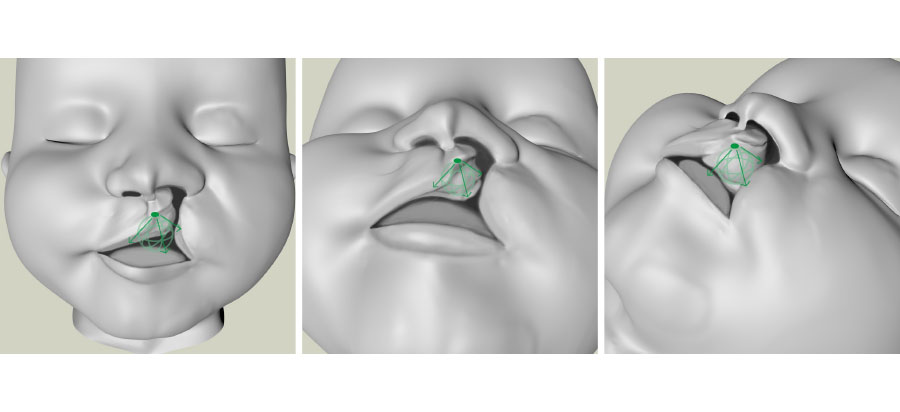

Here, for example, is a special landmark (the peak of Cupid's bow) and its possible movement. Using this tool, young surgeons can change the 3D model intuitively with a finger directly on the screen.
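To keep the finger interaction anatomically plausible, each landmark could be restricted to its possible range of movement. A minimal sketch, assuming that range is approximated by a sphere of radius `max_offset` around the landmark's normal position; the function name and the spherical range are assumptions, and the real range for the peak of Cupid's bow may well have a different shape.

```python
import math

Vec3 = tuple[float, float, float]


def constrain_landmark(proposed: Vec3, anchor: Vec3, max_offset: float) -> Vec3:
    """Clamp a dragged landmark so it stays within max_offset of its anchor.

    If the proposed position is too far away, it is pulled back along the
    line from the anchor to the proposed position.
    """
    offset = [p - a for p, a in zip(proposed, anchor)]
    length = math.sqrt(sum(c * c for c in offset))
    if length <= max_offset:
        return proposed
    scale = max_offset / length
    return tuple(a + c * scale for a, c in zip(anchor, offset))
```

Clamping along the drag direction keeps the landmark under the user's finger direction while preventing positions outside the allowed range.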

By turning the model and moving the point, you can adapt the model to the appearance of the patient's cleft. You can see this idea below.
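When the model has been turned, a finger drag on the screen no longer runs along the model's own axes, so the drag has to be rotated back into model coordinates before it moves the landmark. A minimal sketch, assuming an orthographic view, rotation only about the vertical axis and a fixed millimetre-per-pixel scale; `screen_drag_to_model`, `model_yaw` and `mm_per_px` are hypothetical names.

```python
import math

Vec3 = tuple[float, float, float]


def rotate_y(v: Vec3, angle: float) -> Vec3:
    """Rotate vector v about the vertical (y) axis by angle radians."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = v
    return (c * x + s * z, y, -s * x + c * z)


def screen_drag_to_model(
    drag_px: tuple[float, float], model_yaw: float, mm_per_px: float
) -> Vec3:
    """Convert a finger drag on screen into a displacement in model space.

    Assumes an orthographic view along the z axis, screen x to the right and
    screen y pointing upwards (flip dy if the touch API reports y downwards),
    and a model turned by model_yaw about the vertical axis. The drag is
    mapped back by the inverse rotation (-model_yaw).
    """
    dx, dy = drag_px
    view_delta = (dx * mm_per_px, dy * mm_per_px, 0.0)
    return rotate_y(view_delta, -model_yaw)
```

With the model unrotated, a drag maps directly onto the model's x and y axes; after a quarter turn, a horizontal drag moves the landmark along the model's depth axis instead.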